Downloading

Recently, I had to retrieve Sequencing data in fastQ format belonging to a paper from Law et al. It was for one of two remaining mini-projects standing before me and my PhD.

Mainly they’re for applying my gene centric approach (Watch out for the next part it’ll be released soon!) to a time series dataset of total RNA and for a enriched reactor core.

So it begins with the following line in the publication:

All raw metagenome, metatransriptome and amplicon sequencing data used in this study are publicly available from NCBI under BioProject ID: PRJNA320780 (http://www.ncbi.nlm.nih.gov/bioproject/320780).

- metagenome ie DNA

- metatranscriptome ie. total RNA

- amplicon 16S only

Sounds easy now aint it, go to link, click on download and you’ll get everything you need. Well it wasn’t. =(

Previously my experience with downloading of NCBI has been mostly their web portal via a browser not much programmatically.

Day 1: Getting the Files

K, calm down all I need now is a link to wget or curl the files. No problem I’ve heard of the SRA format, SRA stands for Sequence Read Archives nothing is gonna stop me.

On the Bioproject’s page I saw i had about 40 SRA files to fetch…

Hmmmm. Do I click and download them by hand? “Of course not, I know how to write scripts why should I do this by hand”, I thought.

![]()

After some digging around for ways to get the download link I found this: Entrez Direct: E-utilities on the UNIX Command Line

To install the tool you’ll need install some perl modules first: (forgive the PERL cause everyone knows perl is like never going away in Bioinformatics)

you’ll probably need to CPAN some modules (I recommend installing cpanminus aka cpanm) Perl’s unofficial package manger

Yes so thats a bunch of perl modules to install Net::FTP

cd ~

perl -MNet::FTP -e \

'$ftp = new Net::FTP("ftp.ncbi.nlm.nih.gov", Passive => 1);

$ftp->login; $ftp->binary;

$ftp->get("/entrez/entrezdirect/edirect.tar.gz");'

gunzip -c edirect.tar.gz | tar xf -

rm edirect.tar.gz

export PATH=$PATH:$HOME/edirect

./edirect/setup.sh

After installing this well you could finally start downloading the SRA… (you wished)

Digging through the website it was easy to find the button to download the SRAs, but getting the links to all 40 SRAs programmatically, not so easy!

And yeap I was pretty much right, after looking for a way to get the runInfo.csv

Day2 : The saga continues: Do dont need to download the files

EDIRECT=/path/2/eDirect

cd $EDIRECT

esearch -db sra -query PRJNA320780 | ./tools/edirect/efetch --format runinfo

which looks like this:

| Run | ReleaseDate | LoadDate | spots | bases | spots_with_mates | avgLength | size_MB | AssemblyName | download_path | Experiment | LibraryName | LibraryStrategy | LibrarySelection | LibrarySource | LibraryLayout | InsertSize | InsertDev | Platform | Model | SRAStudy | BioProject | Study_Pubmed_id | ProjectID | Sample | BioSample | SampleType | TaxID | ScientificName | SampleName | g1k_pop_code | source | g1k_analysis_group | Subject_ID | Sex | Disease | Tumor | Affection_Status | Analyte_Type | Histological_Type | Body_Site | CenterName | Submission | dbgap_study_accession | Consent | RunHash | ReadHash |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SRR3501849 | 2016-05-18 11:35:07 | 2016-05-13 11:31:37 | 25818676 | 7797240152 | 25818676 | 302 | 4224 | NA | https://sra-download.ncbi.nlm.nih.gov/srapub/SRR3501849 | SRX1759558 | 844 | WGS | RANDOM | METAGENOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP075031 | PRJNA320780 | 2 | 320780 | SRS1435427 | SAMN04957382 | simple | 942017 | activated sludge metagenome | UPWRP_SW_d1_r1 | NA | NA | NA | NA | NA | NA | no | NA | NA | NA | NA | NA | SRA425235 | NA | public | 8C81A9CE61F9010A73220794D655E084 | 0AE4D27EB24ECF49E094557AD7255216 |

| SRR3501850 | 2016-05-18 11:50:28 | 2016-05-13 11:46:22 | 31189839 | 9419331378 | 31189839 | 302 | 5112 | NA | https://sra-download.ncbi.nlm.nih.gov/srapub/SRR3501850 | SRX1759559 | 845 | WGS | RANDOM | METAGENOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP075031 | PRJNA320780 | 2 | 320780 | SRS1435428 | SAMN04957383 | simple | 942017 | activated sludge metagenome | UPWRP_SW_d2_r1 | NA | NA | NA | NA | NA | NA | no | NA | NA | NA | NA | NA | SRA425235 | NA | public | E649F6CDCC80915B98BE85CD437B7EFE | B58C5296FB135FCF2E9BFD8544C33B29 |

| SRR3501851 | 2016-05-18 11:47:02 | 2016-05-13 11:42:17 | 31966019 | 9653737738 | 31966019 | 302 | 5244 | NA | https://sra-download.ncbi.nlm.nih.gov/srapub/SRR3501851 | SRX1759560 | 945 | WGS | RANDOM | METAGENOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP075031 | PRJNA320780 | 2 | 320780 | SRS1435429 | SAMN04957392 | simple | 942017 | activated sludge metagenome | UPWRP_SW_d1_r2 | NA | NA | NA | NA | NA | NA | no | NA | NA | NA | NA | NA | SRA425235 | NA | public | 81EC07EC8BC6509DBCB00BC4FA7401A9 | 9AD8B926EF9D20E3A2FD10582C72B592 |

| SRR3501852 | 2016-05-18 12:02:10 | 2016-05-13 11:57:54 | 29331148 | 8858006696 | 29331148 | 302 | 4854 | NA | https://sra-download.ncbi.nlm.nih.gov/srapub/SRR3501852 | SRX1759561 | 946 | WGS | RANDOM | METAGENOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP075031 | PRJNA320780 | 2 | 320780 | SRS1435430 | SAMN04957393 | simple | 942017 | activated sludge metagenome | UPWRP_SW_d2_r2 | NA | NA | NA | NA | NA | NA | no | NA | NA | NA | NA | NA | SRA425235 | NA | public | 63B30D9EC717121777A138CECA1F1ACA | 35A116CCE17CBA7F425465AA9D7DBB6B |

| SRR3501853 | 2016-05-18 11:50:18 | 2016-05-13 11:46:11 | 34045865 | 10281851230 | 34045865 | 302 | 5630 | NA | https://sra-download.ncbi.nlm.nih.gov/srapub/SRR3501853 | SRX1759562 | 947 | WGS | RANDOM | METAGENOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP075031 | PRJNA320780 | 2 | 320780 | SRS1435431 | SAMN04957394 | simple | 942017 | activated sludge metagenome | UPWRP_SW_d3_r2 | NA | NA | NA | NA | NA | NA | no | NA | NA | NA | NA | NA | SRA425235 | NA | public | 3AEB6D6C4FE383F80D1E16E588C2D374 | 876D1E61221339EF202EAAEC93AD0C5C |

| SRR3501854 | 2016-05-18 11:46:21 | 2016-05-13 11:41:11 | 29717524 | 8974692248 | 29717524 | 302 | 4935 | NA | https://sra-download.ncbi.nlm.nih.gov/srapub/SRR3501854 | SRX1759563 | 948 | WGS | RANDOM | METAGENOMIC | PAIRED | 0 | 0 | ILLUMINA | Illumina HiSeq 2500 | SRP075031 | PRJNA320780 | 2 | 320780 | SRS1435432 | SAMN04957395 | simple | 942017 | activated sludge metagenome | UPWRP_SW_d4_r2 | NA | NA | NA | NA | NA | NA | no | NA | NA | NA | NA | NA | SRA425235 | NA | public | D467387C3A275485CC8EA2025E6044ED | 9EB031A8BDAD3C2135E92CF3DBB29169 |

Great the links to the SRAs are in the column download_path

So by the way I found this awesome download script which combined pycurl + tqdm (friend recommended me this, if you were wondering what tqdm stands for, it means “progress” in Arabic: taqadum)

import os

import pycurl

from tqdm import tqdm

downloader = pycurl.Curl()

def sanitize(c):

c.setopt(pycurl.UNRESTRICTED_AUTH, False)

c.setopt(pycurl.HTTPAUTH, pycurl.HTTPAUTH_ANYSAFE)

c.setopt(pycurl.ACCEPT_ENCODING, b'')

c.setopt(pycurl.TRANSFER_ENCODING, True)

c.setopt(pycurl.SSL_VERIFYPEER, True)

c.setopt(pycurl.SSL_VERIFYHOST, 2)

c.setopt(pycurl.SSLVERSION, pycurl.SSLVERSION_TLSv1)

#c.setopt(pycurl.FOLLOWLOCATION, False)

c.setopt(pycurl.FOLLOWLOCATION, True)

def do_download(url, local, *, safe=True):

rv = False

with tqdm(desc=url, total=1, unit='b', unit_scale=True) as progress:

xfer = XferInfoDl(url, progress)

if safe:

local_tmp = local + '.tmp'

else:

local_tmp = local

c = downloader

c.reset()

sanitize(c)

c.setopt(pycurl.NOPROGRESS, False)

c.setopt(pycurl.XFERINFOFUNCTION, xfer)

c.setopt(pycurl.URL, url.encode('utf-8'))

with open(local_tmp, 'wb') as out:

c.setopt(pycurl.WRITEDATA, out)

try:

c.perform()

except pycurl.error:

os.unlink(local_tmp)

return False

if c.getinfo(pycurl.RESPONSE_CODE) >= 400:

os.unlink(local_tmp)

else:

if safe:

os.rename(local_tmp, local)

rv = True

progress.total = progress.n = progress.n - 1

progress.update(1)

return rv

class XferInfoDl:

def __init__(self, url, progress):

self._tqdm = progress

def __call__(self, dltotal, dlnow, ultotal, ulnow):

n = dlnow - self._tqdm.n

self._tqdm.total = dltotal or guess_size(dlnow)

if n:

self._tqdm.update(n)

def guess_size(now):

''' Return a number that is strictly greater than `now`,

but likely close to `approx`.

'''

return 1 << now.bit_length()

K so I’ve downloaded the SRA files, I just need to extract the fq from the SRA. Which brings to the SRAtoolkit

Its basically a collection of cmd line tools to deal with the SRA files, what we’re really interested with is fastq-dump

Its not exactly clear in NCBI’s readme, but here’s what it does fastq-dump tries to automatically download the SRAs again even though you’ve got the local file ready.

Running fastq-dump -v shows you its trying to download from NCBI.

The rationale for this I assume is to prevent corrupted files since there’s another tool in the toolkit vdb-validate ./<filename>.sra which checks its integrity.

You could read the whole issues thread but I think this user’s frustration just sums it up for me as well.

@klymenko That is unacceptable. I do not need alignments. just the raw fastq files. This has nothing to do with RefSeq files. Further, neither fastq-dump -h nor online man pages say anything about accompanying refseq files. It simply says you can act on local SRA files. Further, all of the above validation tools approve of the downloaded SRA file

The owner of the repo goes on to threaten the poor fella who’s just like me trying to download file

If you want help, please ask. If you want to flame, then I’ll close the issue.

LONG SIGH

So the prescribed way of doing this is actually to run the following if you havent downloaded the SRA.

prefetch <SRA ID>

fastq-dump <SRA ID>

Yes you won’t even have to go thru downloading 1. entrez tool to get the 2. runInfo.csv with the links to get the 3. SRA files.

And if you’ve already downloaded a local SRA file like me, you will have to run prefetch to check the local file, my guess is it stores the location for fastq-dump to recognise.

prefetch <localFile>

fastq-dump <localFile>

The story deepens, turns out the extraction of the fq from the SRA is excruciatingly slow and its not just me

It’s been running for about 3 hours and so far extracted ~15GB of what I expect to be around 60GB. An improvement, but still not exactly fast…

Looked around for other solutions to speed this up and game across the gnu parallel tool.

parallel fastq-dump --split-files -F --gzip {} ::: *.sra

but it doesnt really solve anything since each file still has to be extracted by 1 thread.

Thank god, later i stumbled across parallel-fastq-dump which makes use of the -N and -X flags in the original fastq-dump which

splits the extraction over different ranges so it can be parallelized.

parallel-fastq-dump --sra-id SRR3501865 -F --threads 20 --outdir ../unzipped --split-files --gzip --tmpdir /scratch/uesu/

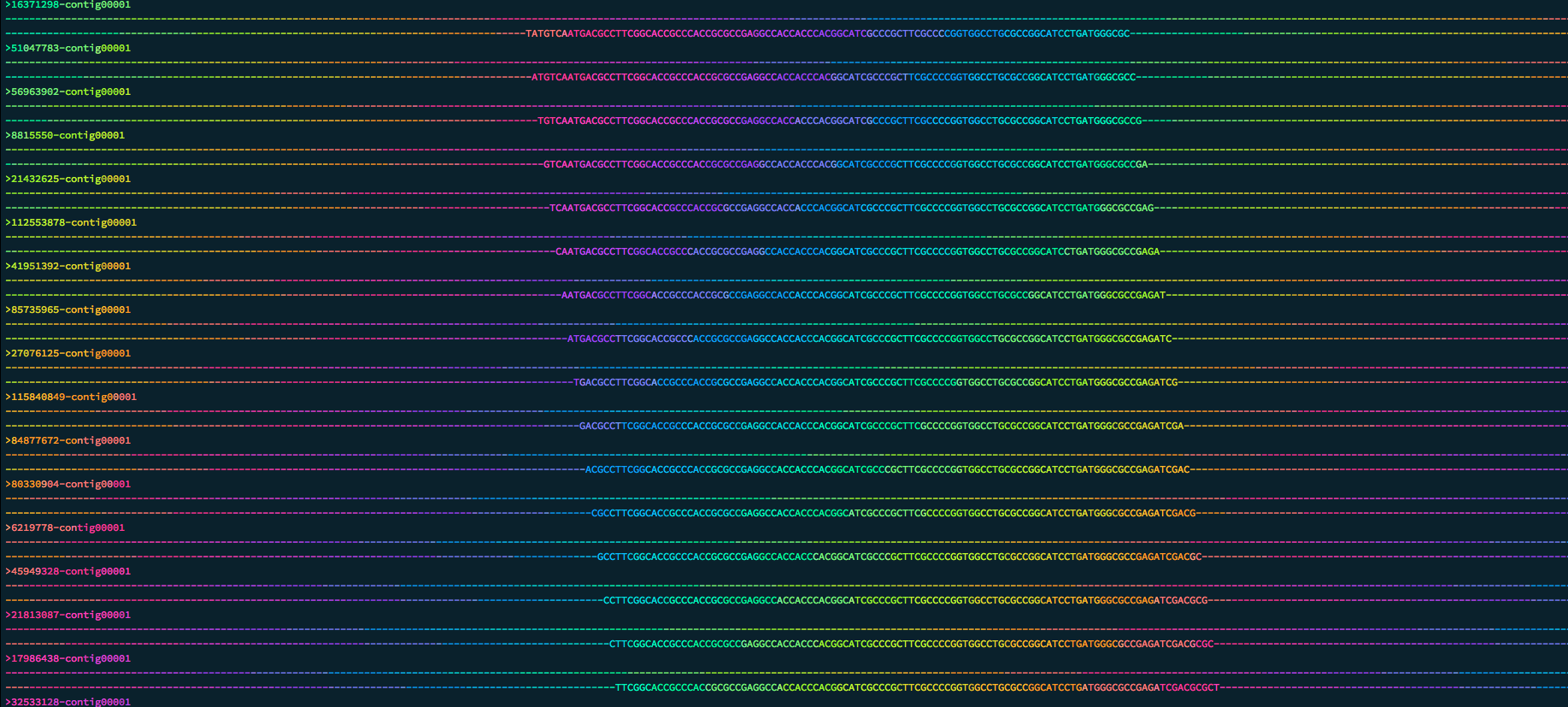

The results are stunning

Conclusion

Thats all folks, the moral of the story will be to try and avoid downloading through NCBI if u can but straight from the source if possible. Have a good one!

comments powered by Disqus